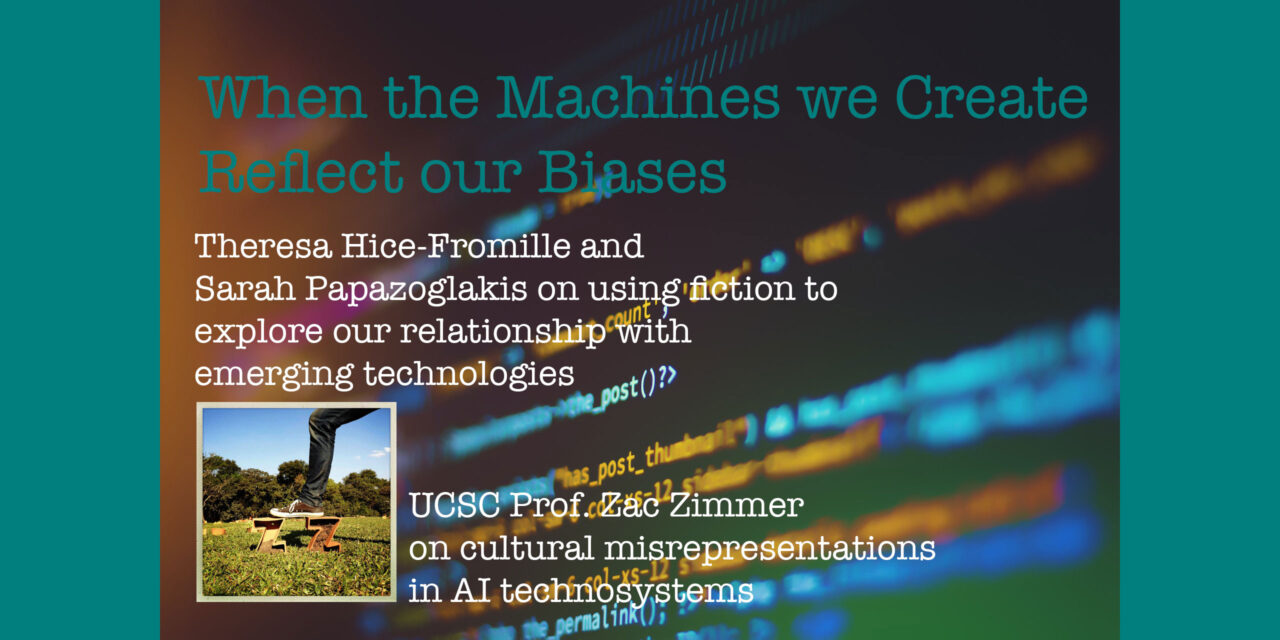

On Talk of the Bay, host Suki Wessling explores how the study of humanities intersects with the future of technology. First, Sarah Papazoglakis and Theresa Hice-Fromille speak about their research into how speculative fiction created by underrepresented communities can pinpoint hopes and concerns about technology. By studying and documenting the issues explored in speculative fiction, they have developed recommendations for industry on how to build more inclusive and responsive technologies.

Then UCSC Professor Zac Zimmer joins to discuss the history that led to large language model (LLM) systems like ChatGPT, the problems these systems pose for our culture, and possible ways to mitigate their risks. Because these systems were built on a model predicated on “fooling” humans into thinking that the AI is human (that is, passing the Turing Test), they are not actually the best tools to help us learn and express ourselves. Bringing the conversation back around to how emerging technologies affect the lives of real people, we discuss what can be done to ensure that technologies are developed in the interest of improving humanity.

Sarah Papazoglakis is a strategist and subject matter expert in privacy, ethics, and consumer trust. Prior to joining Reality Labs at Meta, she served as an ethics and privacy advisor for Google and DeepMind and developed the Digital Privacy Policy for the City of San José. She holds a PhD in Literature with a designated emphasis in Critical Race and Ethnic Studies from UC Santa Cruz.

Theresa Hice-Fromille is a PhD candidate in Sociology with designated emphases in Critical Race and Ethnic Studies and Feminist Studies. Hice-Fromille's doctoral research, conducted in solidarity with community organizations that take Black youth abroad, focuses on the legacies of decolonial education in Black radical organizations and diasporic travel. In fall 2023, Hice-Fromille will join Ohio State University as an Assistant Professor of Geography.

Zac Zimmer is an Associate Professor of Literature at UC Santa Cruz. His research focuses on the interdisciplinary study of literature, culture, and technology in the hemispheric Americas. His book *First Contact: Speculative Visions of the Conquest of the Americas* is forthcoming.

For further exploration:

- Humanizing the metaverse

A Humanities Institute fellowship brings trained humanists into high-tech companies, providing firms with creative and fluid thinkers while also giving scholars a view into how their expertise can be used in different positions.

- The Avatar Manifesto

As avatars evolve to become more accurate digital representations of ourselves, there is a need to create guidelines and best practices on how we create and operate them. These guidelines are meant to be the start of the conversation (for developers, ethicists, designers, and platform operators) as our understanding and perception of embodiment evolve over time. These guidelines will always be rooted in the more persistent underlying principles.

- Feeling good about feeling bad: virtuous virtual reality and the automation of racial empathy

- Sankofa City

“Sankofa City” is a community design project envisioning concepts and prototypes for the future of urban technology, such as augmented reality and self-driving cars. Based in Leimert Park in South LA, neighborhood residents and USC students work in teams to imagine alternative futures tied to local culture. This final video is based on 3 months of workshops and prototyping.

- Race After Technology

“Race After Technology is a brilliant, beautifully argued, engagingly written, and groundbreaking work. Ruha Benjamin is that rare scholar whose sophisticated understanding of science and technology is matched by her deep knowledge of race and racialization. Here she guides us into fresh terrain for understanding and tackling the persistence of racial inequality. This book should be read by everyone committed to creating a more just world.” — Imani Perry, Princeton University

- “Ten Inventions Inspired by Science Fiction”

- The ELIZA Effect

Throughout Joseph Weizenbaum’s life, he liked to tell this story about a computer program he’d created back in the 1960s as a professor at MIT. It was a simple chatbot named ELIZA that could interact with users in a typed conversation. As he enlisted people to try it out, Weizenbaum saw similar reactions again and again — people were entranced by the program.

- “ChatGPT Is a Blurry JPEG of the Web”

“I think that this incident with the Xerox photocopier is worth bearing in mind today, as we consider OpenAI’s ChatGPT and other similar programs, which A.I. researchers call large language models.”

- “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜”

In this paper, we take a step back and ask: How big is too big? What are the possible risks associated with this technology and what paths are available for mitigating those risks? We provide recommendations including weighing the environmental and financial costs first, investing resources into curating and carefully documenting datasets rather than ingesting everything on the web, carrying out pre-development exercises evaluating how the planned approach fits into research and development goals and supports stakeholder values, and encouraging research directions beyond ever larger language models.